The Cerebello – dreamed up and crafted by yours truly, with some help from Midjourney.

I’m excited to bring you this taster of Nutopia’s upcoming, premium IN FOCUS series.

A prelude to a new beat

When TikTok user Ghostwriter977 used synthetic voice technology to create an artificial collaboration between Drake and the Weeknd, some of my friends were certain it was a campaign for an actual collaboration between the two pop stars. The song was so good that many thought it was the real deal and the AI theme merely a stunt playing on the cultural zeitgeist.

The Weeknd-ish & Drake-ish

Today we know it was an AI fake, but it amassed around 10 million views on TikTok and was streamed 629,439 times on Spotify before being pulled on April 18 due to copyright claims. While Drizzy took on AI on social media, he also undoubtedly benefited from the event, which sparked a surge in ‘Drake’ search interest.

Since then, we’ve seen many other well-known AI artists pop up, with Frank Sinatra singing ‘Gangsta’s Paradise’ and Johnny Cash belting out potpourris, including the very timely and very un-Cashy ‘Barbie Girl.’ David Guetta recently had Eminem rap to one of his songs in a live performance without Eminem’s permission, or even his contribution.

More serious efforts are also being made. Producer Timbaland has used AI to resurrect none other than Biggie Smalls to rap over his fresh beats, and Paul McCartney brought back John Lennon for The Beatles’ last song. Midnatt, a K-pop artist from Hybe (of BTS fame), released his latest album in six languages (Korean, Chinese, English, Japanese, Spanish, and Vietnamese) using the voice-augmenting AI technology from Supertone.

Biggie Smalls-ish

Deep roots, fresh notes

The professional music industry has long used sophisticated data for many purposes. As far back as 15 years ago, Music Intelligence Solutions’ Hit Song Science tool and Uplaya website were analyzing hits and predicting future ones for artists like the Black Eyed Peas and Outkast.

More recently, HITLAB has built on similar but more advanced hit prediction software with a fair amount of success, blossoming into a conglomerate that includes the HITLAB Music Group label, HITLAB Publishing, HITLAB Media, and even HITLAB Fintech.

However, the recent AI wave is different.

Today’s wave provides near-universal access to formidably capable online AI tools that anyone can use to churn out new music, from interesting experiments to flat-out ripoffs. This has raised urgent questions about the future of the music industry, the role and rights of human artists, and the essence of musical creativity itself.

Mathematics and melodies: a perfect harmony

Notation is something mathematics and music share

Computers and music got along well from the start. Music is deeply steeped in numbers and ratios, providing fertile ground for computer processing. From Bach to Beyonce, music adheres to mathematical patterns. And patterns, clustering and classification are what AI does.

As early as the 1800s, Ada Lovelace, the mother of the modern computer, dreamt that the computer could one day be used for composition. Her vision can be seen as an astonishing prophecy, as ENIAC, the first actual computer, was then more than 100 years in the future.

In 1956, only 11 years after ENIAC’s introduction, composer Lejaren Hiller and composer-mathematician Leonard Isaacson created the ‘Illiac Suite,’ the first musical piece composed by a computer. Hiller also released the first book on computer composition in 1959.

Music’s rhythm, tempo, pitch, melody, harmony, themes, motifs, and progressions are all quantifiable and fundamentally mathematical structures. So are concepts like attack, decay, sustain and release. This makes music an ideal language for today’s AIs as well.
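To make the point concrete, here is a minimal sketch of how the envelope stages mentioned above (attack, decay, sustain, release) reduce to plain arithmetic. The function name, the default timings and the straight-line ramps are my own illustrative assumptions, not any particular synthesizer's implementation, and the sketch assumes the note is held at least as long as the attack and decay combined.

```python
def adsr_envelope(t, note_length, attack=0.05, decay=0.1,
                  sustain_level=0.7, release=0.2):
    """Return the loudness (0.0 to 1.0) of a note t seconds after it starts.

    Hypothetical helper for illustration: all ramps are linear, and
    note_length (how long the key is held) is assumed to be at least
    attack + decay.
    """
    if t < 0:
        return 0.0
    if t < attack:
        # Attack: ramp up from silence to full volume.
        return t / attack
    if t < attack + decay:
        # Decay: fall from full volume to the sustain level.
        progress = (t - attack) / decay
        return 1.0 - progress * (1.0 - sustain_level)
    if t < note_length:
        # Sustain: hold steady while the key is down.
        return sustain_level
    if t < note_length + release:
        # Release: fade from the sustain level back to silence.
        progress = (t - note_length) / release
        return sustain_level * (1.0 - progress)
    return 0.0


# Sample the shape of a one-second note every 100 milliseconds.
for step in range(13):
    t = step / 10
    print(f"{t:.1f}s  {adsr_envelope(t, note_length=1.0):.2f}")
```

Four numbers describe how a note swells and fades, which is exactly the kind of compact, regular structure that software handles well.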

The most commercially lucrative patterns are also simple. Some industry insiders say over 80% of hit songs can be traced back to similar four-chord progressions. This is a level of repetition and predictability on which even simpler AI thrives.
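As a rough illustration of how mechanical such a pattern is, the sketch below spells out a four-chord progression in any major key. I am assuming the familiar I–V–vi–IV progression here, since no specific progression is named above; the helper names are hypothetical, and for simplicity every note is written with sharps (so some keys will show A# where a musician would write Bb).

```python
NOTE_NAMES = ["C", "C#", "D", "D#", "E", "F", "F#", "G", "G#", "A", "A#", "B"]
MAJOR_SCALE_STEPS = [0, 2, 4, 5, 7, 9, 11]  # semitones above the tonic


def triad(key, degree):
    """Build the three-note chord on a scale degree (1-7) of a major key.

    The chord is made by taking every other note of the scale,
    starting from the given degree.
    """
    tonic = NOTE_NAMES.index(key)
    notes = []
    for skip in (0, 2, 4):
        index = degree - 1 + skip
        # Wrap around the seven-note scale, adding an octave when we do.
        offset = MAJOR_SCALE_STEPS[index % 7] + 12 * (index // 7)
        notes.append(NOTE_NAMES[(tonic + offset) % 12])
    return notes


def four_chords(key):
    """Return the I-V-vi-IV chords (assumed progression) for a major key."""
    return [triad(key, degree) for degree in (1, 5, 6, 4)]


print(four_chords("C"))
# [['C', 'E', 'G'], ['G', 'B', 'D'], ['A', 'C', 'E'], ['F', 'A', 'C']]
```

Changing the key only shifts one number, which is why a single learned pattern can be reused across countless songs.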

Loops that feed themselves

As the digital data explosion creates vast libraries of musical data spanning centuries and encompassing various genres, styles, and cultures, everything from Gregorian chants to grime becomes a commonly known and accessible language. Generative AI systems have a rich tapestry to learn from and feed back into as they generate new musical material.

In this way, AI can act as a parallel particle accelerator, colliding together unexpected styles while vetting them for mathematical compatibility. Wholly new genres can emerge with this sped-up experimentation as AI can, with relative ease, generate new pieces that reflect the learned musical language with new and unique bents but with consistent quality.

The content explosion of recent years also demands vast amounts of new music. Creating scores for content, apps, games and films becomes easier with AIs that compose faster than humans and do it at scale. Customization and personalization become possible, allowing music that changes dynamically according to the listener's tastes, preferences, behavior, and context.

While AI excels in analyzing patterns and creating technically ‘sound’ music, it lacks the emotional understanding, cultural context, and creative intuition humans possess. At its best, music is about connecting with listeners profoundly and emotionally. No AI can recreate the emotion of Adele's "Hello" or the resonance of Kendrick Lamar's "Alright."

At least not yet.

A symphony of destruction? Shaking the industry's foundations

AI's sonic boom is heard loud and clear in the music industry. It's a mix of fascination and dread for industry veterans, and not without reason. The ability of AI to mimic artist voices, churn out tunes at an unprecedented rate, and flood the market with fresh tracks puts revenue streams at risk and the brands of traditional players on shaky ground.

Streaming platforms that become flooded with AI-crafted music can be manipulated, and their recommendation algorithms can be made to boost specific streams in the hope of lusher royalties. Amid rampant overproduction, the once distinct sound of an artist can dissolve into an ocean of abundant audio soup.

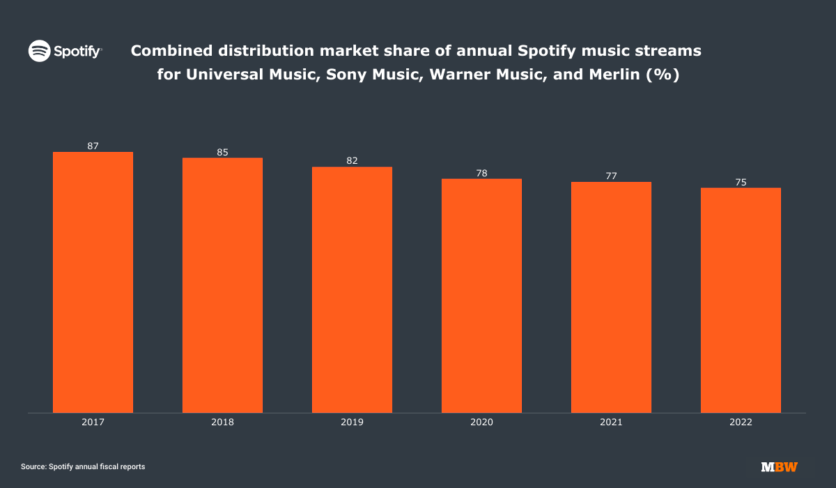

The major players' share of Spotify streams has already dropped from 87% in 2017 to 75% in 2022. The musical juggernauts (UMG, Sony Music Entertainment, Warner Music Group, and Merlin) are seeing their grip on Spotify streams slipping, gobbled up by the independent musicians, smaller labels, and artificial 'artists' that are all proliferating.

Source: musicbusinessworldwide.com

Around 100,000 songs hit the streaming services daily, with an average of 49,000 pieces being added to Spotify alone every day in 2022. This musical tsunami is partly due to the dubious tactics of AI companies and artists, as they upload the same song under various titles and aliases, eroding the share of major labels and the traditional music economy.

One extreme but plausible scenario is Spotify launching its proprietary AI artists armed with its catalog of all the world’s music throughout human history. Spotify has already been training its audiences to listen to vibe-driven playlists that downplay any individual artist’s role in the set. The above move would complete this play with repercussions that labels and artists dread.

Amid these apocalyptic overtones, industry leaders are fighting back so as not to repeat the mistakes of their digital past. UMG has asked streaming companies to block AI companies’ access to their services. The Recording Industry Association of America has initiated the Human Artistry Campaign, which has paradoxically been criticized for sidelining independent artists.

Spotify has thus far complied, recently removing tens of thousands of songs created with Boomy, a generative AI music service, after UMG flagged them for artificially boosting streaming volumes. Boomy is specifically suitable for creating the abovementioned ‘vibe’ songs whereby individual artists are less important, like ‘trip-hop for studying’ or ‘EDM for exercise.’

Playing a new tune: today’s AI tools in music

AI has become so influential so fast because it extends across the entire spectrum of music, from analysis to creation, presentation, distribution, and consumption. AI's audio applications also run from automating the more conventional practices to innovating in the more experimental arenas.

I – MUSICAL UNDERSTANDING

Musical Analysis

AI services like Moodagent and Musimap can analyze a song to determine its mood, genre, key, tempo, and other characteristics.

Music Recommendations

Recommendations are the best-known application of AI in music. Spotify, Apple Music, Tidal and YouTube Music famously use ML algorithms to recommend songs to listeners.

II – MUSICAL CREATIVITY

Composition

Beyond analysis, categorization and recommendation, likely the most famous (or infamous) use of AI in music today is in composition and songwriting. Here we’ll find an explosion of new tools.

Tools like the deep neural network MuseNet and recurrent neural network MelodyRNN can analyze musical data and generate new compositions.

MusicVAE is based on variational autoencoders and generates music and scores specifically by interpolating between different musical styles.
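The idea of interpolating between styles can be pictured with a toy sketch. An autoencoder compresses each piece of music into a list of numbers (a latent vector), and blending two such lists gives in-between points that a decoder can turn back into music. The code below shows only the blending step, using plain linear interpolation as a simplifying assumption; it is not Magenta's API, the function name is hypothetical, and the two short vectors are made-up stand-ins for real encoded melodies.

```python
def interpolate_latents(z_start, z_end, steps):
    """Return `steps` evenly spaced blends between two latent vectors.

    Illustrative only: a real system would encode music into z_start and
    z_end, and decode each blended vector back into notes.
    """
    if steps < 2:
        raise ValueError("steps must be at least 2")
    if len(z_start) != len(z_end):
        raise ValueError("latent vectors must have the same length")
    path = []
    for i in range(steps):
        alpha = i / (steps - 1)  # 0.0 at the start, 1.0 at the end
        blend = [(1 - alpha) * a + alpha * b for a, b in zip(z_start, z_end)]
        path.append(blend)
    return path


# Made-up two-number "styles" standing in for real encoded melodies.
baroque = [0.0, 2.0]
grime = [1.0, 0.0]
for point in interpolate_latents(baroque, grime, steps=3):
    print(point)
```

The middle point sits halfway between the two inputs, which is the numerical version of a piece that borrows equally from both styles.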

The Music Transformer uses self-attention to maintain long-term coherence, producing longer and more consistent compositions.

Amper Music is an AI music composer that creates unique, royalty-free soundtracks for various media projects.

Google’s MusicLM is an experimental AI app that generates songs based on simple text prompts, allowing users to turn their ideas into music.

AI can enable renewed takes on renowned songs and artists. One example is ‘Drowned in the Sun,’ a homage to Nirvana generated by Google's Magenta, using data from past recordings.

Lyrics

OpenAI's ChatGPT and other generative tools can be used to create lyrics in various styles and genres or for mimicking and mashing up artists.

Nick Cave recently dismissed ChatGPT’s attempt at Cave-like lyrics as a ‘grotesque mockery of what it is to be human.’ Another great, David Bowie, however, played around with digital lyric randomizers for inspiration as far back as the 90s.

But AI goes beyond composing melodies and coming up with words; it can also contribute to instrumentation and vocalization in traditional or more innovative ways.

III – MUSIC PRODUCTION

Instruments

Google's Magenta Project has developed an AI-powered synthesizer called NSynth Super that uses neural networks to generate new sounds from existing samples.

Vocals

Holly+ is Holly Herndon’s digital twin, a custom voice instrument that allows anyone to upload polyphonic audio and receive a download of that music sung back in Herndon’s distinctive voice.

Beyond individual instruments and vocals, AI can help orchestrate, arrange, mix and master audio at speed and scale and with impeccable precision.

Instrumentation and Arrangement

AI tools like Amadeus Code and AIVA (Artificial Intelligence Virtual Artist) can create backing tracks or classical orchestrations in addition to songwriting help.

Sound Design and Mixing

iZotope can analyze the spectral content of music and help master tracks, adjust volume levels, tune EQ balance, and even apply effects.

Mastering

One of the better-known tools is LANDR, a platform that offers AI-assisted analysis of tracks for effects, enhancing the overall sound and assisting in mastering.

IV – LIVE EXPERIENCES

AI has even stepped into musical co-creation on the fly, partnering up for live performances. As musical, lyrical and visual artists combine forces with architects, engineers, and technologists, interactive art experiences and installations are becoming more common.

Holly Herndon’s 2019 album "PROTO" used an AI baby ‘Spawn’ that can analyze, mimic and create human voices on the fly.

David Dolan is another example of an artist who uses AI for jazz-like improvised performances, complementing his piano playing.

Despite his brushes with AI, or maybe precisely because of them, Drake has also turned to AI to have a doppelgänger of himself live on stage with him.

Pop innovator Arca collaborated with the UK's Bronze and the French artist Philippe Parreno on a musical installation in New York's MoMA that changes with factors such as temperature and crowd density.

V – BIOMETRIC AUDIO

Personalized musical experiences can today be based on biometric data and other contextual information to generate adaptive soundscapes for getting through the day or into the zone.

Physical Performance

Moodify creates personalized playlists according to preferences and desired moods to help with sleep, focus, relaxation, and exercise.

Mental Health

Companies like Endel use AI to generate soundscapes to improve focus, relaxation, and sleep, combating conditions like ADHD, insomnia and tinnitus. The soundscapes adapt in real time based on the user's input, external factors and context.

VI – BUSINESS MODELS

AI can help artists and labels with their more business-minded efforts, from finding and enabling collaboration opportunities between artists to new revenue streams.

Audius is a decentralized music community and discovery database that enables artists and labels to opt in to AI collaborations and interact with AI-generated tracks uploaded by others.

Grimes recently launched Elf.Tech, software that lets people create new tracks with her vocals in exchange for 50% of the royalties.

From the studio to the stage

Some are afraid virtual artists will wholly replace genuine ones even on stage. And to be sure, some AI artists have struck gold. The hologram Hatsune Miku used Vocaloid software to ‘perform live’ to millions of fans worldwide.

The singer-fashion-brand-influencer Lil Miquela ‘attended’ Prada’s AW18 fashion show and today has nearly 175,000 monthly listeners on Spotify. AI singer-songwriter Yona has also ‘performed’ at Montreal’s MUTEK music festival.

The abovementioned starlets are, however, still anomalies, not the norm. Furthermore, while real artists can sometimes be demanding and troublesome, pure AI artists are not problem-free either. Capitol Records quickly dropped the world’s first robot rapper FN Meka following criticisms of stereotyping and disputes around compensation to the contributing artists.

Ask the fast-adapting youth for their views on the future, and real artists’ allure seems to last. The New York Times found that while the young are intrigued by new opportunities, they feel genuine loyalty toward the artists. In a tone strikingly different from the Napster era, they even seem to worry about the industry and the labels, recognizing their contribution.

From menace to maestro: opportunities for independent artists

Banging out drum solos is getting easier

Seen from another angle, the AI shockwaves ripping through the music industry are not trumpeting a doomsday but forging an evolution, especially for aspiring and independent artists.

Today’s artists can still be armed with guitars or drumsticks, but algorithms are increasingly a part of the toolbelt. Many pioneers compare AI to the electric guitar or sampling: once scorned, now commonly accepted and even revered.

While the digital revolution removed the entry barrier of instruments and distribution, you still needed some music theory and playing skills. AI kicks down even these remaining barriers, emphasizing curiosity and discernment. With his exceptional taste but limited technical skills, superstar producer Rick Rubin has long insisted that taste is far more critical in minting hits than technical skill.

As AI closes doors for some, it can open them for others. Some guitarists and drummers may be displaced, but in their place springs up a new generation of multi-instrumentalist home producers. The impact of this shift is new vocabularies and novel sonic aesthetics, leading to a new ‘divergence and renaissance in music’ as my friend and musician Nathaniel Perez put it.

New sounds, new scapes

A prime example of the new GenAI sound is Taryn Southern, who launched an AI-backed album, ‘I AM AI’, as early as 2017, using Amper software to create the instrumentals she arranged behind her voice. The Cotton Modules used AI to create unique soundscapes for their debut album, while 27 Club used a fusion of AI and human vocals in their ‘Lost Tapes of the 27 Club’ release.

Apart from new sounds, whole new music experiences emerge. Dadabots created a nonstop, 24/7 death metal stream on YouTube, something no human band would dare to try. Brian Eno's Reflection app plays auto-generated, seasonally changing tunes that never repeat: a perfect soundtrack for meditation, yoga and creating art.

While many accuse AI of being a mere aggregator, it contains real power in its ability to shatter our creative comfort zones. An AI assistant that consumes entire canons and regurgitates them into new creations can catalyze musical innovation.

As already mentioned, this innovative spirit also extends to the music business. Grimes' Elf.Tech creates new crowdsourced revenue streams while cultivating fan loyalty. Producer Danny Wolf uses AI for everything from album concepts to track listings and from marketing campaigns to budget allocation.

Direct artist-fan platforms like Bandcamp and TikTok supercharge all these opportunities. They bypass industry gatekeepers and empower artists to earn and expose their music based on merit.

And for listeners? A vast, vibrant array of music that the Titans don’t puppet. That’s a musical renaissance turbo-charged by AI.

Striking the right chord: balancing promise and peril of AI in music

Navigating the AI landscape is a challenging task. It demands taming some formidable ethical beasts and tackling copyright conundrums while artists risk being enveloped by a tsunami of AI-produced content.

Copyright laws and AI are still murky. The forces behind the Human Artistry Campaign have penned a creative manifesto demanding that musicians, tech creators, and regulators protect human rights in music. According to them, new copyright/IP exemptions shouldn’t allow AI developers to profit off human creators without consent or compensation.

The U.S. Copyright Office has already declared as uncopyrightable any AI art, music included, generated via user prompts. This is a potential damper on the commercial viability of AI-produced music. At the same time, one has to wonder what level of AI use triggers these clauses in an era where AI is used increasingly as a part of every project, even if not the whole.

VR guru Jaron Lanier has pitched the concept of ‘data dignity,’ where creators get paid for all AI-utilized content. While it’s a commendable idea, how exactly to apply it is still as clear as mud. Some propose segregating human and AI music, putting AI creations in one bucket and human products in another. But as AI-human boundaries blur, this will likely prove a Sisyphean task.

And yet, AI does have an apparent Achilles' heel. A soundalike of Drake or another famous artist might score novelty points, but there’s no evidence that such soundalikes can thrive commercially. After all, fans flock to the artist and their persona, not just their vocal cords.

Ultimately, AI shouldn’t be considered a marauder but a partner, at best augmenting music's richness and reach. The best music always has a human heartbeat, but AI can open new blood flow paths.

As I tune into the AI artist Holly Herndon while writing this, I hear resonant and reassuring proof that human creativity is not just surviving but thriving.

Would you like a keynote presentation on this or a related topic?

Drop me a line at sami@samitamaki.com – and let’s set one up!

For all strategy, consulting, brand and design engagements, contact me at sami.viitamaki@bond-agency.com. We’re always ready to help brands thrive.